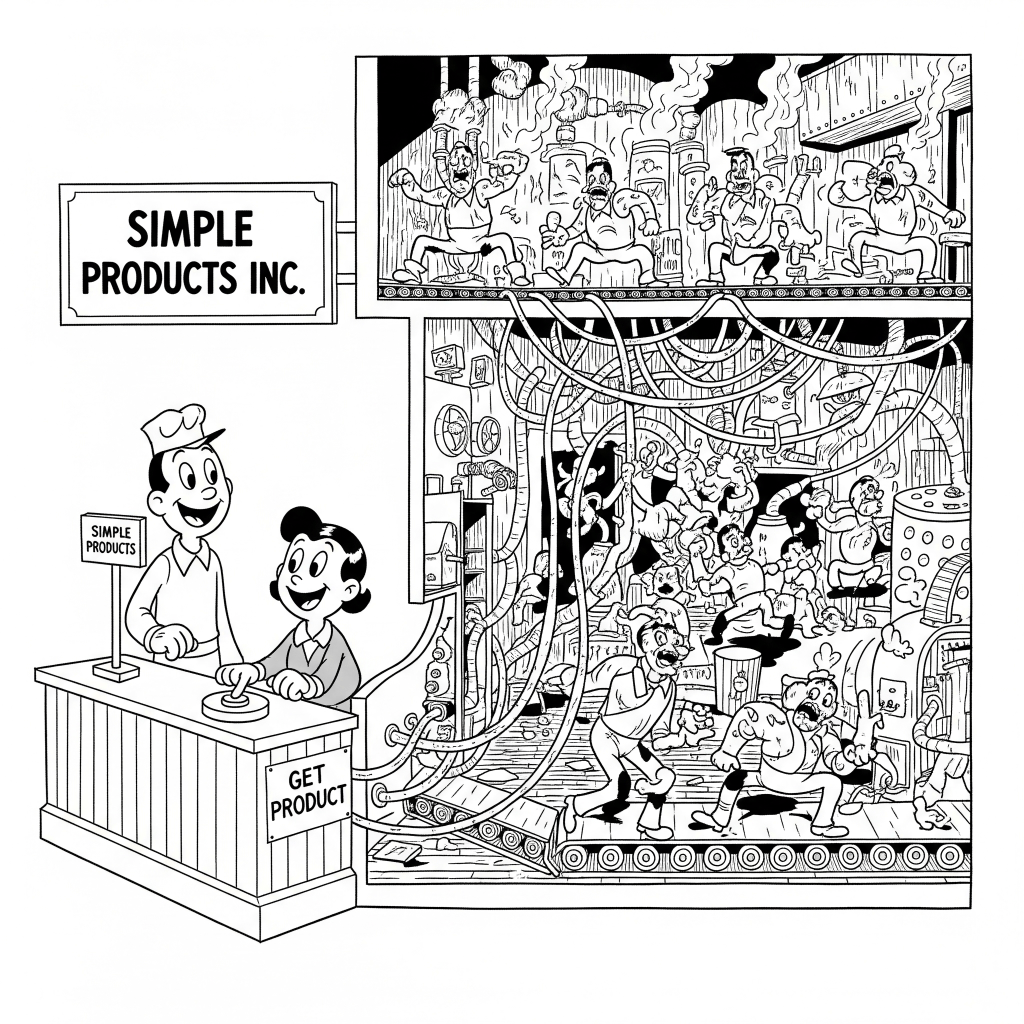

Imagine you are building a factory to manufacture cars. You need complex, specialized components like the engine, or parts that require a multi-step process to manufacture, like the tyres.

If the engine supplier were to simply dump a pallet of raw, unassembled parts on your factory floor, your car assembly line would have to stop while your workers frantically tried to figure out how to build the engine, test it, and prepare it for installation. Your factory would have to become an expert in engine assembly, a job it was never designed to do.

Similarly, tyre manufacturing is a multi-step process requiring high heat for vulcanization. That’s an environment you would not want to accommodate in your factory space.

In both cases, your factory is forced to do the hard, specialized work of preparing raw materials. You will not be efficient.

AI Applications – An Assembly Of Model, Tools, and Data

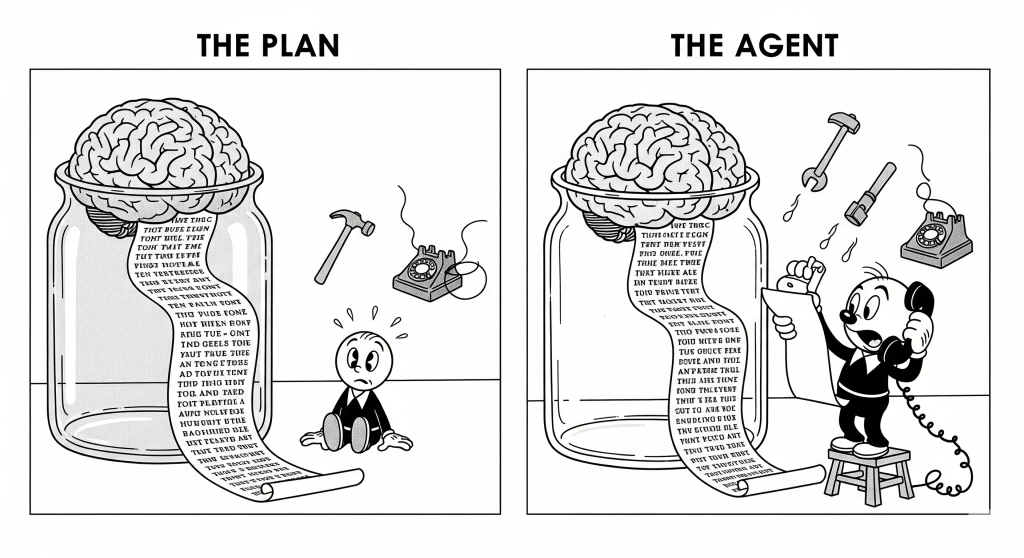

Building an AI application is much like designing a modern assembly line. In both cases, you assemble different components to generate your ultimate product. For today’s AI, this means integrating three key components: the core model (like an LLM), a set of external tools, and a constant flow of data.

To make this assembly truly functional, models are now trained in “tool calling.” This technique allows the LLM to overcome its static knowledge by recognizing when a query requires help from an external tool—like an API or a database. The model learns to call the necessary tool and integrate its response, transforming itself from a simple text generator into a dynamic agent that can act on live data.
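To make the idea concrete, here is a minimal sketch of a tool-calling loop. Everything in it is an assumption for illustration: `fake_model` stands in for a real LLM, `get_weather` returns canned data instead of hitting a live API, and the message shapes are invented rather than taken from any vendor's SDK.

```python
import json
from typing import Callable, Dict, List

# Hypothetical tool: a stand-in for a live API call, returning canned data.
def get_weather(city: str) -> str:
    return f"Sunny in {city}"

# Hypothetical registry mapping tool names to the functions that implement them.
TOOLS: Dict[str, Callable[..., str]] = {"get_weather": get_weather}

def fake_model(messages: List[dict]) -> dict:
    """Stand-in for an LLM: asks for a tool once, then answers with its result."""
    last = messages[-1]
    if last["role"] == "tool":
        return {"type": "text", "content": f"The forecast says: {last['content']}"}
    return {
        "type": "tool_call",
        "name": "get_weather",
        "arguments": json.dumps({"city": "Paris"}),
    }

def run(query: str) -> str:
    """Loop until the model produces a final text answer."""
    messages = [{"role": "user", "content": query}]
    while True:
        reply = fake_model(messages)
        if reply["type"] == "text":
            return reply["content"]
        # The model only requests the call; the application executes it
        # and feeds the result back into the conversation.
        tool = TOOLS[reply["name"]]
        result = tool(**json.loads(reply["arguments"]))
        messages.append({"role": "tool", "content": result})

print(run("What's the weather in Paris?"))
```

Note where the work happens: the model decides that a tool is needed, but the surrounding application is the one that looks the tool up, runs it, and hands the result back.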

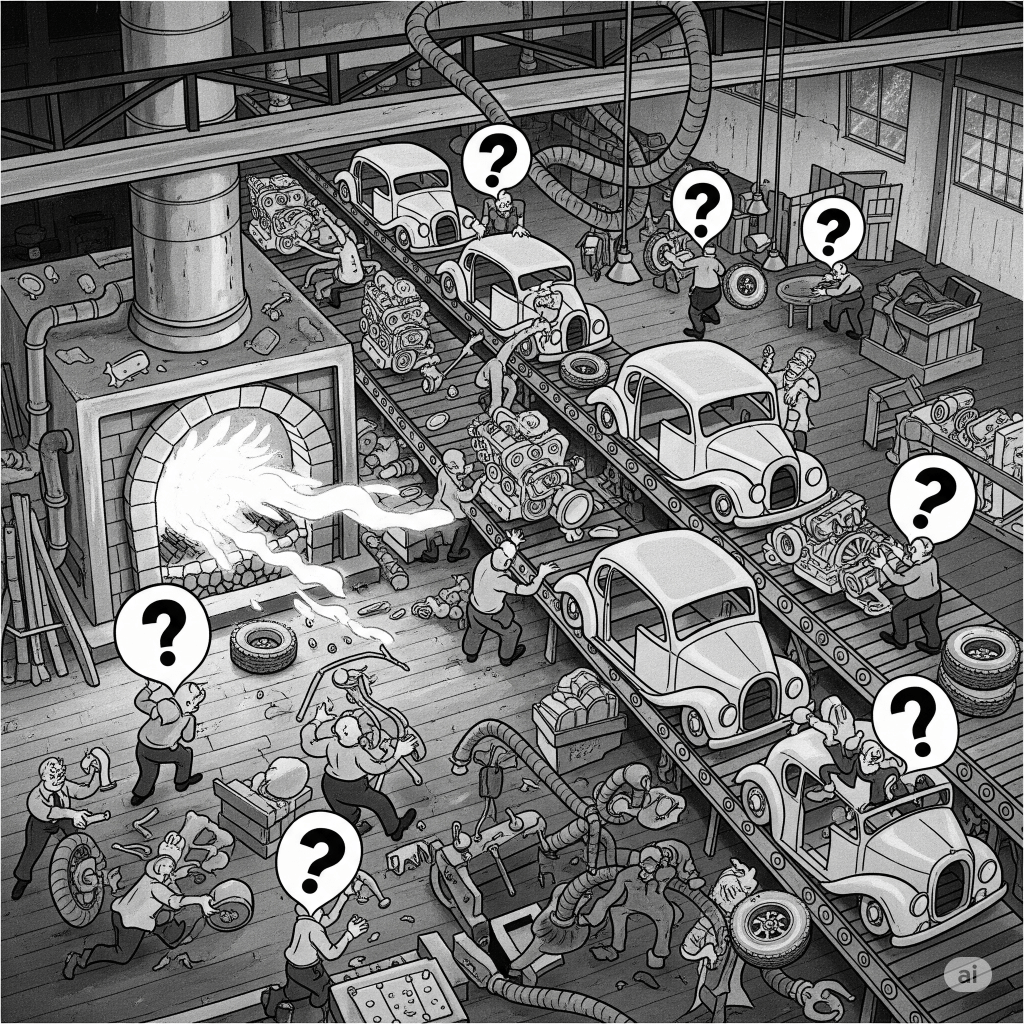

However, this powerful feature creates a significant, hidden burden for the developer. While the AI calls the tool, the developer is responsible for building and maintaining the entire environment for it. For every single tool, they must manage its specific dependencies, understand its unique authentication and data formats, and write brittle “glue code” to make it compatible with the main application. This is like designing a chaotic assembly line where every machine needs a different power outlet, its own specialized mechanic, and a unique instruction manual. It is highly inefficient and bloats the core application with third-party complexity.

This is precisely the problem the Model Context Protocol (MCP) is designed to solve.

MCP: Solving Complexity Through Standardization and Compartmentalization

The Model Context Protocol (MCP) solves this problem by introducing two powerful concepts at once: a universal standard for integration, the equivalent of a universal utility port for every workstation (a USB-C for AI apps), and a decoupled architecture where each tool operates in its own self-contained environment. This combination of a standard interface and a compartmentalized structure is what provides the key benefits.

1. Simplified Integration Through Standardization

First, MCP provides a common language for how an AI application communicates with any tool. While API specifications have existed before, MCP standardizes the layer above that: the protocol and context an AI agent needs to reliably call a tool, understand its capabilities, and use its output. This dramatically simplifies the initial work of integrating a new tool into the assembly line.
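As a rough illustration of what that common language looks like, the sketch below exchanges two requests with a toy in-memory "server". It assumes, based on my reading of the public specification, that MCP messages are JSON-RPC 2.0 and that tools are discovered with `tools/list` and invoked with `tools/call`. The `add` tool, the `handle` and `request` helpers, and the absence of any transport or initialization handshake are simplifications of mine, not part of the protocol.

```python
import json
from typing import Optional

# Hypothetical tool catalogue held by a toy, in-memory "server".
TOOLS = {
    "add": {
        "description": "Add two integers.",
        "inputSchema": {
            "type": "object",
            "properties": {"a": {"type": "integer"}, "b": {"type": "integer"}},
            "required": ["a", "b"],
        },
        "handler": lambda args: str(args["a"] + args["b"]),
    }
}

def handle(raw: str) -> str:
    """Answer one JSON-RPC 2.0 request; only two methods are sketched here."""
    req = json.loads(raw)
    if req["method"] == "tools/list":
        # Discovery: describe every tool without exposing its implementation.
        result = {"tools": [
            {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
            for name, t in TOOLS.items()
        ]}
    elif req["method"] == "tools/call":
        # Invocation: run the named tool and wrap its output as text content.
        tool = TOOLS[req["params"]["name"]]
        text = tool["handler"](req["params"]["arguments"])
        result = {"content": [{"type": "text", "text": text}]}
    else:
        error = {"code": -32601, "message": "Method not found"}
        return json.dumps({"jsonrpc": "2.0", "id": req["id"], "error": error})
    return json.dumps({"jsonrpc": "2.0", "id": req["id"], "result": result})

def request(req_id: int, method: str, params: Optional[dict] = None) -> dict:
    """Client side: build a request, send it to the toy server, parse the reply."""
    msg = {"jsonrpc": "2.0", "id": req_id, "method": method}
    if params is not None:
        msg["params"] = params
    return json.loads(handle(json.dumps(msg)))

listing = request(1, "tools/list")
answer = request(2, "tools/call", {"name": "add", "arguments": {"a": 2, "b": 3}})
print([t["name"] for t in listing["result"]["tools"]])
print(answer["result"]["content"][0]["text"])
```

The point is that the client never needs tool-specific code: it discovers what exists, reads each tool's input schema, and calls every tool with the same message shape.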

2. Independent Evolution Through Compartmentalization

Second, and arguably more powerful, is how MCP forces a clean separation between the application and the tool (or prompt, or resources). An MCP Server is a self-contained application. This means the AI application doesn’t need to know anything about the tool’s internal environment, its programming language, or its software dependencies. All of that complexity is managed entirely within the MCP Server.

This creates a clear and powerful division of labor, which brings us back to the assembly line. The engine manufacturer can completely re-design their own factory—using new machines, new software, and new processes. But as long as the final engine they ship has the same standard mounting points and data connectors, your car factory’s assembly line doesn’t need to change at all. The engine can evolve independently.

Similarly, a tool provider can completely update their service and its dependencies within their own MCP Server. As long as the MCP interface remains consistent, the AI application that calls it requires no modification. This decoupling is what allows for a truly scalable and maintainable ecosystem, where developers can build complex applications by assembling robust, independent components without inheriting their internal complexity.
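Here is a small sketch of that independence. The `ToolServer` interface, the two `SearchServer` classes, and the `app` function are all hypothetical names invented for this example; they only mimic the idea of a stable interface and do not use the real MCP SDK.

```python
from typing import Dict, List, Protocol

class ToolServer(Protocol):
    """Hypothetical stand-in for the stable interface a server exposes."""
    def call(self, name: str, arguments: dict) -> str: ...

DOCS = ["engine manual", "tyre guide", "paint spec"]

class SearchServerV1:
    """Original internals: a linear scan over every document."""
    def call(self, name: str, arguments: dict) -> str:
        if name != "search":
            raise ValueError(f"unknown tool: {name}")
        hits = [d for d in DOCS if arguments["query"] in d.split()]
        return ", ".join(hits)

class SearchServerV2:
    """Rewritten internals: a prebuilt word index, same external contract."""
    def __init__(self) -> None:
        self._index: Dict[str, List[str]] = {}
        for doc in DOCS:
            for word in doc.split():
                self._index.setdefault(word, []).append(doc)

    def call(self, name: str, arguments: dict) -> str:
        if name != "search":
            raise ValueError(f"unknown tool: {name}")
        return ", ".join(self._index.get(arguments["query"], []))

def app(server: ToolServer) -> str:
    """The application depends only on the interface, never on the internals."""
    return "Found: " + server.call("search", {"query": "engine"})

# Swapping the implementation requires no change to the application code.
print(app(SearchServerV1()))
print(app(SearchServerV2()))
```

Both calls print the same line, even though the second server was rebuilt from the inside. That is the engine supplier re-tooling their factory while your assembly line keeps running.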

MCP Is Not a Fad: It Was Bound to Happen

This brings us to a concluding thought, one that could serve as the title for this entire post: The Model Context Protocol is not a temporary fad; it is an evolutionary concept for building complex systems.

The reason for this is simple: if MCP didn’t exist, something like it would have to be invented. The principles it embodies—compartmentalization and specialization—are fundamental to solving complexity. We see this pattern repeated everywhere, in both natural and man-made systems.

We see this most profoundly in biology, a system that has been self-optimizing for billions of years. Evolution itself discovered that the most robust path to creating complex organisms was through compartmentalization: specialized cells form tissues, and tissues form organs. Each component hides its immense internal complexity, communicating and collaborating through standardized biological and chemical signals.

We see it in modern software architecture, where developers have moved from monolithic applications to microservices—small, independent services that communicate through standardized APIs, allowing each one to evolve without breaking the entire system. We even see it in global trade with the invention of the simple shipping container. Before this standard interface, logistics were a nightmare of custom work. The container allowed the entire global system of ships, cranes, and trucks to specialize and scale.

In every case, a standard interface enables a clear division of labor, allowing a system to grow in sophistication without collapsing under its own weight.

MCP is the application of this universal, time-tested principle to the assembly line of AI. This is not theoretical. The value of this compartmentalized approach is why major industry players like Google, Microsoft, and OpenAI are rapidly adopting MCP, which Anthropic introduced.

All That Glitters Is Not Gold

While the Model Context Protocol (MCP) presents a compelling vision, its initial design appears to prioritize functionality at the expense of a robust security framework. The current specification leaves key implementation details ambiguous, and it bases authorization on OAuth 2.1, which is still a draft standard and has yet to see wide industry adoption. Furthermore, researchers have identified critical risks, including prompt injection, the potential exposure of credentials from MCP servers, and supply chain attacks via malicious third-party tools. As the ecosystem evolves to mitigate these threats, security must remain a paramount consideration for developers adopting the protocol.

P.S. This is a high-level opinion on MCP. Stay tuned for future articles with actual technical examples and descriptions!
